Top 6 AI SAST tools in 2026

February 2, 2026

AI SAST (AI-powered static application security testing) is no longer just “a SAST tool.” In 2026, the best AI-powered SAST tools help teams reduce noise, triage faster, and move from findings to fixes without burning out developers or AppSec.

Traditional SAST still matters because it can catch issues early (before code runs), but teams have learned the hard way that “turn it on in CI” often produces a wall of alerts and low trust. In 2026, AI changes the workflow in four practical ways:

Detection: some tools now reason about code context well enough to flag business logic flaws and authentication issues that pattern rules tend to miss.

Triage: grouping and explaining issues so teams spend less time sorting noise.

Prioritization: using more context (code + ownership + reachability/exposure) to decide what truly matters.

Fix guidance: generating fix suggestions and (in some platforms) validating them before they reach a PR.

What buyers should expect from “best AI SAST tools” in 2026:

A clear story for “where AI is used” (detection vs triage vs remediation), not vague “AI-powered” marketing.

Developer-native delivery (IDE + PR feedback) so issues are fixed in flow, not routed into ticket purgatory.

Fix suggestions with guardrails (quality gates, retesting, confidence scoring, or human-in-the-loop checks).

Forward-looking “agentic” patterns (tools that can plan/execute/validate remediation steps, often via IDE assistants and emerging integration standards).

What Is SAST?

Static Application Security Testing (SAST) is a method of finding security issues by analyzing source code before it runs. Instead of testing a live application, SAST scans code at rest, typically during development or in CI/CD pipelines, to catch vulnerabilities early, when they are cheaper and easier to fix. Common issues SAST looks for include injection flaws, insecure authentication logic, unsafe data handling, and misused APIs. Because SAST runs without executing the app, it works well for “shift-left” security and fits naturally into modern development workflows.
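To make “injection flaws” concrete, here is a minimal Python sketch of the source-to-sink pattern a SAST data-flow rule looks for, next to the parameterized fix. It uses only the standard-library `sqlite3` module; the table and function names are hypothetical, and nothing here is specific to any tool in this list.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a tiny in-memory users table for the demo."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Source: `name` comes from the user. Sink: it is concatenated into SQL.
    # A SAST data-flow rule would flag this source-to-sink path as SQL injection (CWE-89).
    query = "SELECT name FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the driver binds `name` as data, which breaks the taint path.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


if __name__ == "__main__":
    conn = make_db()
    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # the injected OR clause matches every row
    print(find_user_safe(conn, payload))    # treated as a literal name, so nothing matches
```

Because the scanner only needs to see where untrusted data flows, it can report this without ever running the application.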

SAST vs. AI SAST (AI-Powered vs. AI-Native)

Traditional SAST and AI SAST differ mainly in how intelligence is applied. Most “AI-powered SAST” tools start with classic SAST detection (rules, patterns, and data-flow analysis) and then use AI to augment the results. In this model, AI helps after the scan by explaining findings, grouping similar issues, reducing noise, prioritizing alerts, or suggesting fixes. The core detection engine is still traditional SAST; AI acts as an assistant that makes the output more usable for humans.

AI-native SAST takes a fundamentally different approach. Instead of bolting AI onto the end of a legacy scanner, AI-native tools use large language models (LLMs) and contextual reasoning as part of the scanning process itself. These systems analyze code more like a security engineer would: understanding intent, control flow, and business logic, not just matching patterns. The result is fewer false positives, better handling of complex logic flaws, and clearer, more actionable guidance for developers. In short, AI-powered SAST improves how teams consume SAST results, while AI-native SAST rethinks how vulnerabilities are found in the first place. That distinction matters as buyers look toward 2026 and beyond.
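Here is a short, hypothetical Python example of the kind of logic flaw that pattern matching tends to miss. The query is parameterized, so an injection rule has nothing to flag, yet it never checks that the invoice belongs to the caller (an insecure direct object reference). The table, the ownership rule, and the function names are illustrative assumptions. Whether a given tool catches this is exactly what a pilot on your own code should test.

```python
import sqlite3
from typing import Optional, Tuple

Invoice = Tuple[int, str, float]


def make_invoice_db() -> sqlite3.Connection:
    """In-memory invoices table with two owners (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE invoices (id INTEGER PRIMARY KEY, owner TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO invoices (id, owner, total) VALUES (?, ?, ?)",
        [(1, "alice", 120.0), (2, "bob", 75.5)],
    )
    return conn


def get_invoice_unchecked(conn: sqlite3.Connection, current_user: str, invoice_id: int) -> Optional[Invoice]:
    # Parameterized, so an injection rule stays quiet. But `current_user` is never
    # used: any logged-in user can read any invoice by guessing its id.
    return conn.execute(
        "SELECT id, owner, total FROM invoices WHERE id = ?", (invoice_id,)
    ).fetchone()


def get_invoice_checked(conn: sqlite3.Connection, current_user: str, invoice_id: int) -> Optional[Invoice]:
    # Enforces the (assumed) business rule: users may only read their own invoices.
    return conn.execute(
        "SELECT id, owner, total FROM invoices WHERE id = ? AND owner = ?",
        (invoice_id, current_user),
    ).fetchone()
```

The fix is one extra predicate, but knowing it is required depends on a business rule (“users may only see their own invoices”) that never appears in the code as a recognizable pattern.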

If you'd like to dig deeper into the topic, check out our white paper on AI-native SAST.

Best AI-powered SAST tools to evaluate for 2026

Below are the AI SAST tools to know in 2026, written for developers, AppSec engineers, security leaders, and founders.

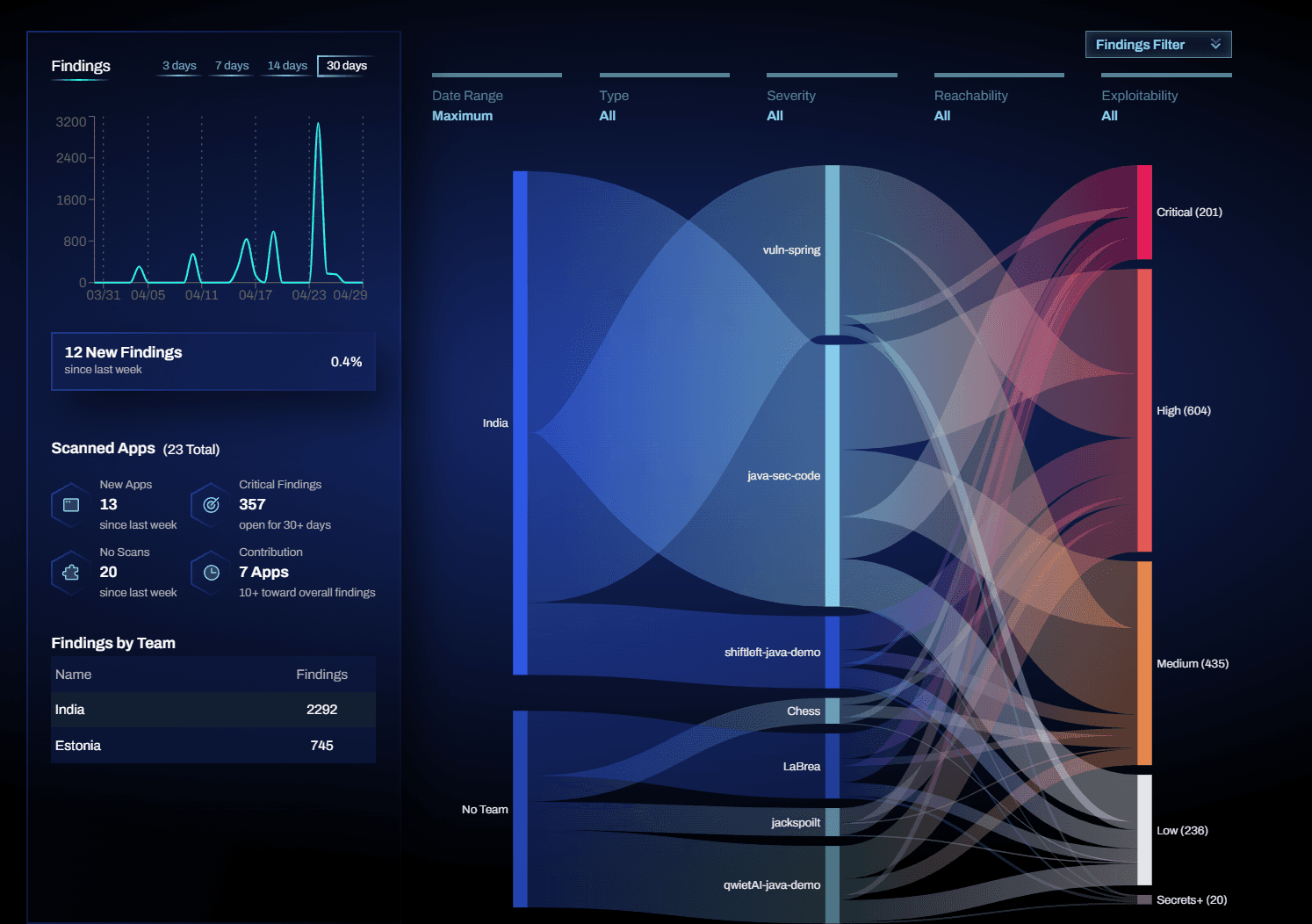

Corgea

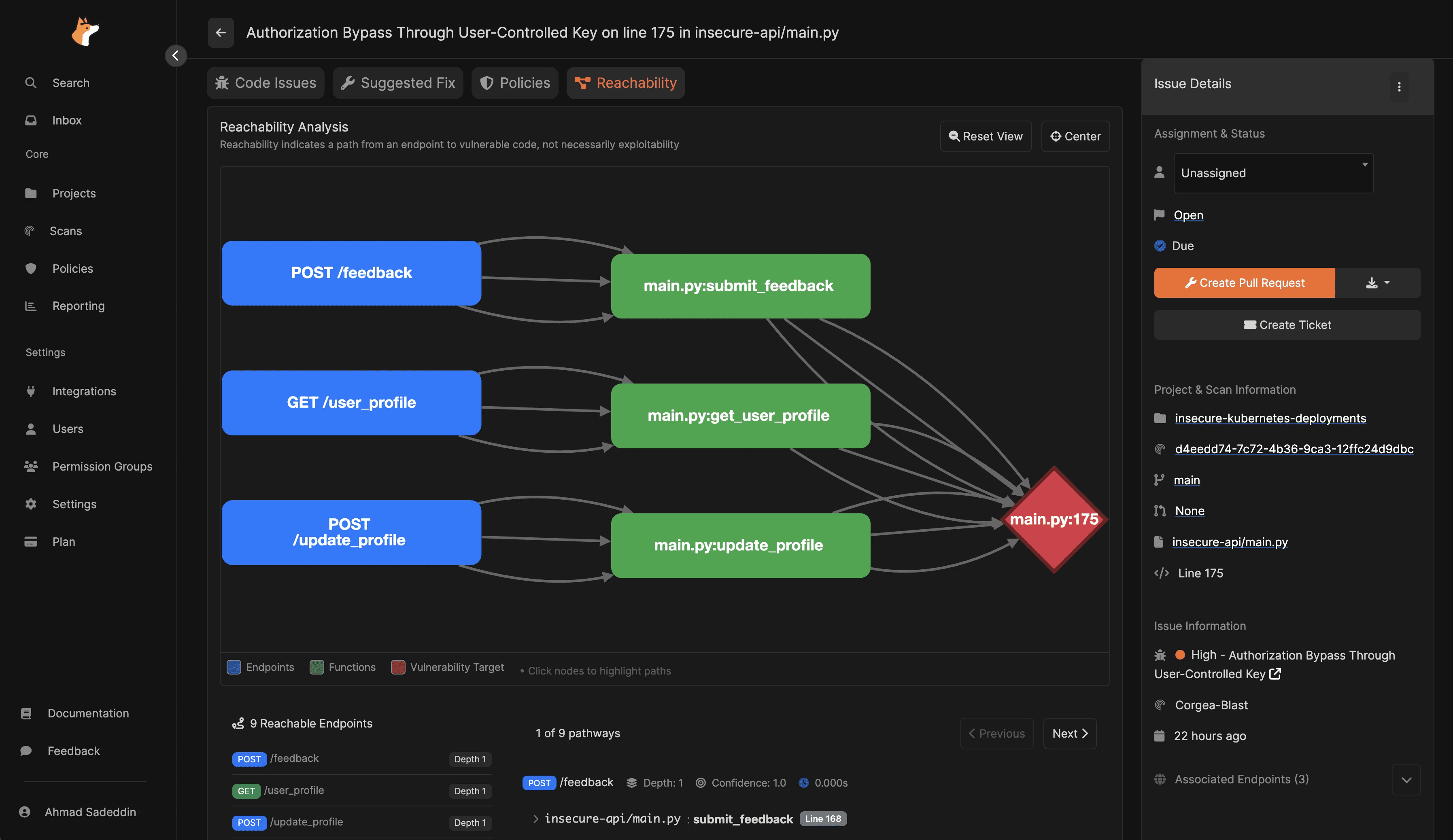

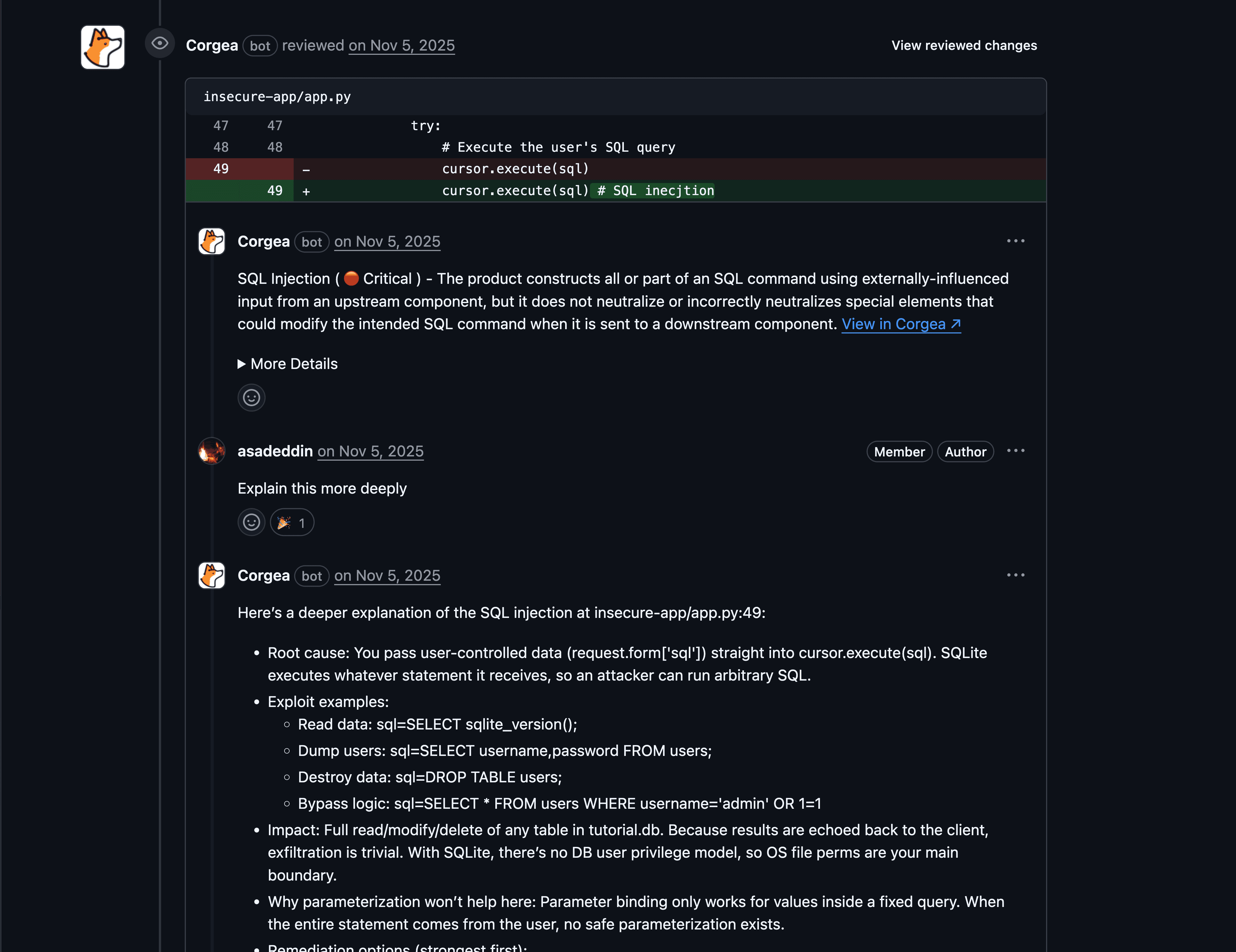

Corgea is an AI-native SAST platform built around modern LLM-driven analysis. In 2026 terms, it’s one of the clearest examples of “AI SAST” where AI isn’t just explaining results but is central to how the tool reasons about code context and security impact.

What it is

A SAST tool designed to “think about code” contextually (including logic and intent), then produce developer-friendly output: fewer noisy alerts, clearer explanations, and actionable fix guidance.

How it uses AI

Uses large language models to understand code context and logic, aiming to reduce false positives compared to pattern-only detection.

Uses PolicyIQ to let teams provide business and environment context in natural language, which is used to improve vulnerability detection accuracy, false-positive identification, and fix generation.

Leans heavily into developer workflow integration (IDE + PR-level fix suggestions), aiming to keep remediation in-flow.

Best use cases

Teams drowning in traditional SAST noise and looking for higher signal and clearer “why this matters” explanations.

Orgs that care about business-logic flaws and context-dependent issues that are hard to express as static rules.

Security leaders who want to encode org-specific context (architecture, data handling constraints, compliance requirements) without building a custom rules DSL from scratch.

Who it’s best for

AppSec teams and engineering orgs that want the most AI-native approach in this list, especially those optimizing for noise reduction + developer adoption + fix guidance in workflows.

Key strengths

Clear AI-native posture: AI is positioned as part of contextual reasoning, not only summarization.

Policy-driven contextualization (PolicyIQ) to tailor results and fixes to how your business actually works.

Developer-friendly integrations and PR-oriented fix suggestions (helps security move at developer speed).

Potential limitations

Corgea’s approach is explicitly “AI-native,” which means you should validate it on your own codebase and threat model. AI-native SAST can reduce false positives, but it’s not expected to eliminate them entirely.

If your purchasing decisions depend on analyst rankings such as the Gartner Magic Quadrant, a traditional tool might be the better fit.

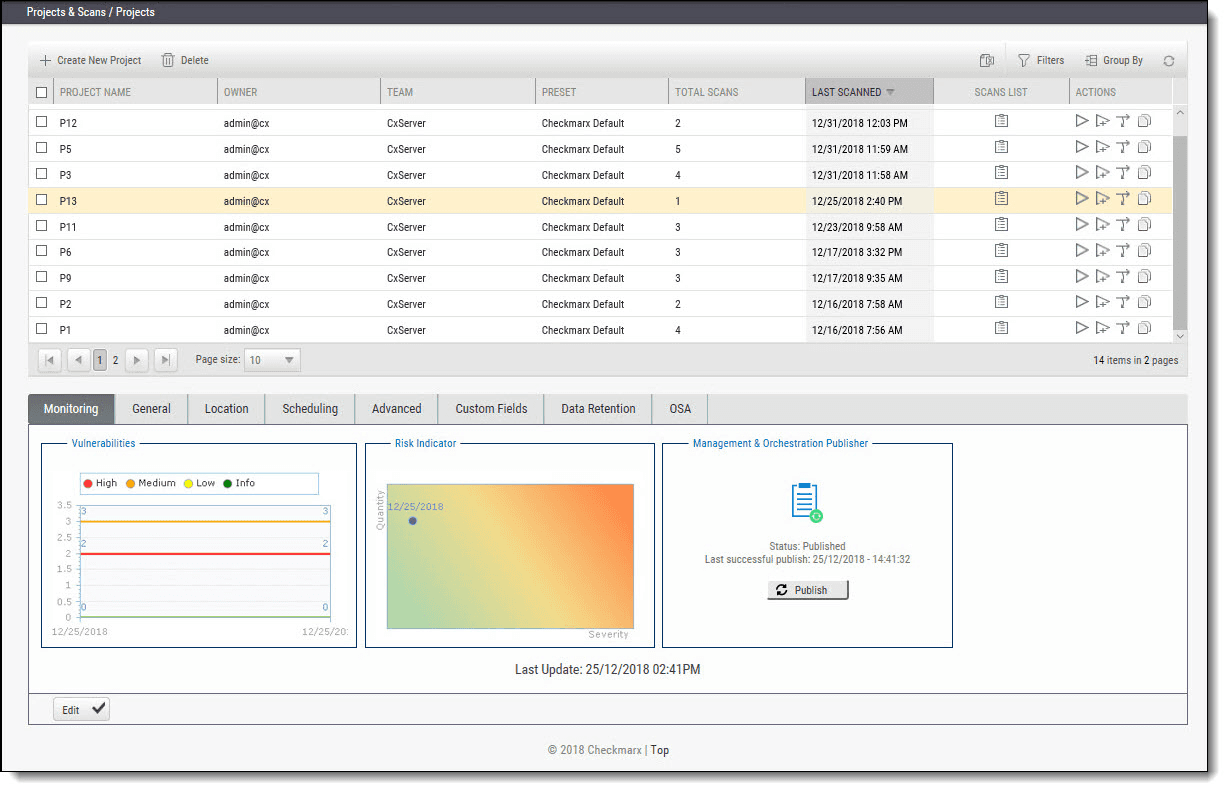

Checkmarx

Checkmarx is a long-established AppSec platform known for enterprise SAST. In 2026, its story is less “AI-native SAST from scratch” and more “enterprise SAST + AI to accelerate customization and remediation”, including agentic remediation concepts in IDE workflows.

What it is

An enterprise application security platform where SAST is a core capability, with additional modules across code and supply-chain security.

How it uses AI

AI Query Builder: uses AI to help teams customize SAST detection logic (queries) so the scanner better understands organization-specific patterns and requirements.

“AI remediation at scale”: positions AI as a way to automate or accelerate fixes across multiple scanning categories (SAST, SCA, secrets, IaC).

Developer Assist: supports “agentic AI remediation” in the IDE, where the platform feeds context to an AI agent (via a Model Context Protocol, or MCP, server) to generate remediated code that developers can accept or refine.

Best use cases

Large organizations that already run enterprise-grade SAST and need to reduce time-to-customize and time-to-remediate across many teams.

Platform/security teams that want IDE-time scanning and remediation support, especially where developers are using AI coding assistants and need security feedback in the same loop.

Who it’s best for

Security leaders and AppSec teams in mature environments (many repos, complex governance, multiple scanning categories) who want AI-assisted remediation workflows while keeping an enterprise SAST backbone.

Key strengths

Strong enterprise orientation: customization and governance are treated as first-class needs (not an afterthought).

AI-assisted detection customization (query building) helps reduce the “we can’t express our codebase patterns” problem.

Potential limitations

The breadth and flexibility that make Checkmarx attractive for enterprises can be heavy for smaller teams. Expect more platform-level setup and operational ownership than with a “drop-in” SAST tool.

Its detection core is still traditional SAST, which typically struggles to detect more complex vulnerabilities such as business logic flaws and missing authentication.

Access/experience can vary by IDE and AI-assistant tier (the Developer Assist docs differentiate limited vs full access and list supported environments).

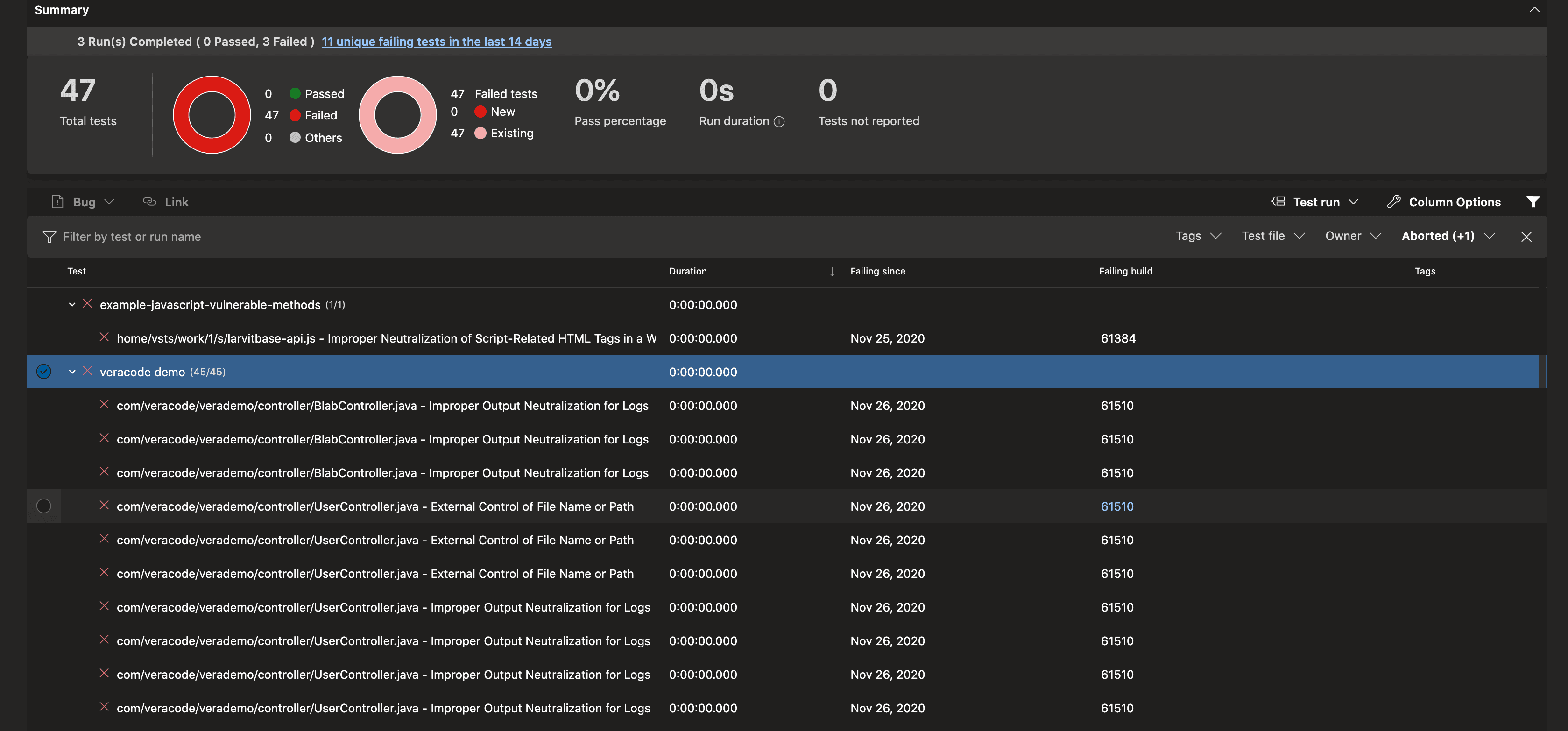

Veracode

Veracode is a major application security vendor, and its 2026 AI story is strongly centered on AI-assisted remediation via Veracode Fix, turning static findings into secure code patches with guardrails and repeatability.

What it is

A platform for application security testing with a well-known static analysis foundation, plus Veracode Fix for AI-generated remediation guidance and patches.

How it uses AI

Veracode Fix generates AI-created code patches that developers can review and apply, aiming to reduce research and fix time.

In the docs, Fix is described as using a machine-learning model plus retrieval-augmented generation (RAG) against Veracode remediation data to generate secure patches from Pipeline Scan findings.

Veracode also emphasizes “responsible design” and quality gates to reduce hallucination risk and avoid retaining customer code.

Best use cases

Enterprises trying to burn down security debt where “finding flaws faster” is not the bottleneck; remediation capacity is.

Teams that want AI-generated fixes but with a strong emphasis on consistency, repeatability, and governance-friendly assurances.

Developers who prefer batch-style remediation (the Fix docs describe both single-fix and batch-fix flows).

Who it’s best for

Security leaders and engineering orgs that need dependable, workflow-integrated remediation help, especially when AppSec teams are trying to scale fixes across large portfolios.

Key strengths

Concrete “findings → patches” workflow: Fix is explicitly built to take static findings (including CWE/severity/location) and produce secure patch options.

Multiple ways to integrate into developer workflows (IDE, CLI, CI/CD are explicitly called out).

Clear disclosure about scope: Fix resolves findings from Pipeline Scan, which is useful clarity when you’re designing an end-to-end workflow.

Potential limitations

Veracode Fix’s scope is not universal across every Veracode scanning mode (the docs state it resolves findings from Pipeline Scan, not Upload and Scan).

Fix accelerates remediation, but detection still rests on traditional static analysis, which typically struggles to detect more complex vulnerabilities such as business logic flaws and missing authentication.

Like all AI remediation, patches still need engineering review to ensure correctness, performance, and compatibility with local conventions, even when guardrails are strong.

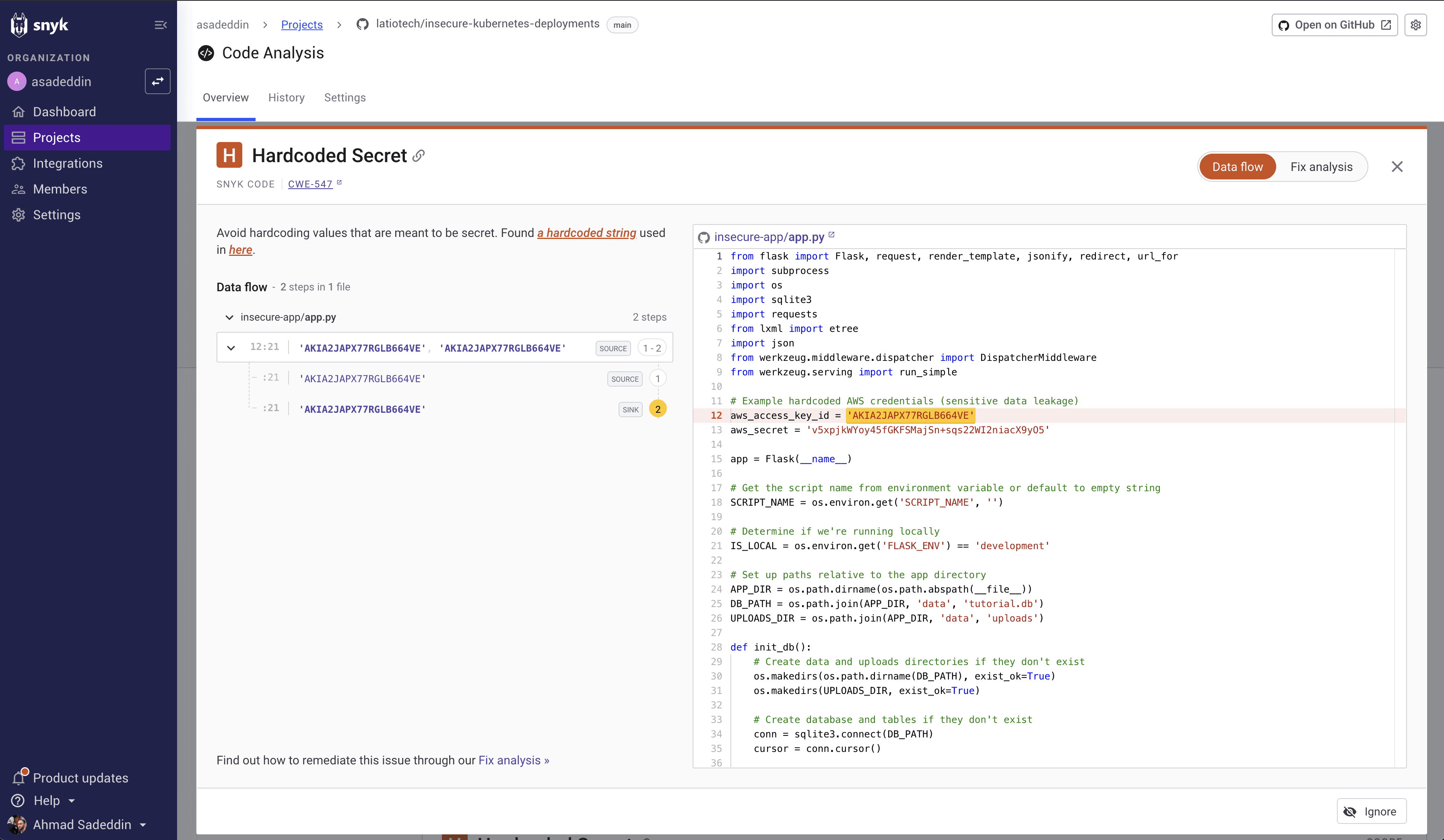

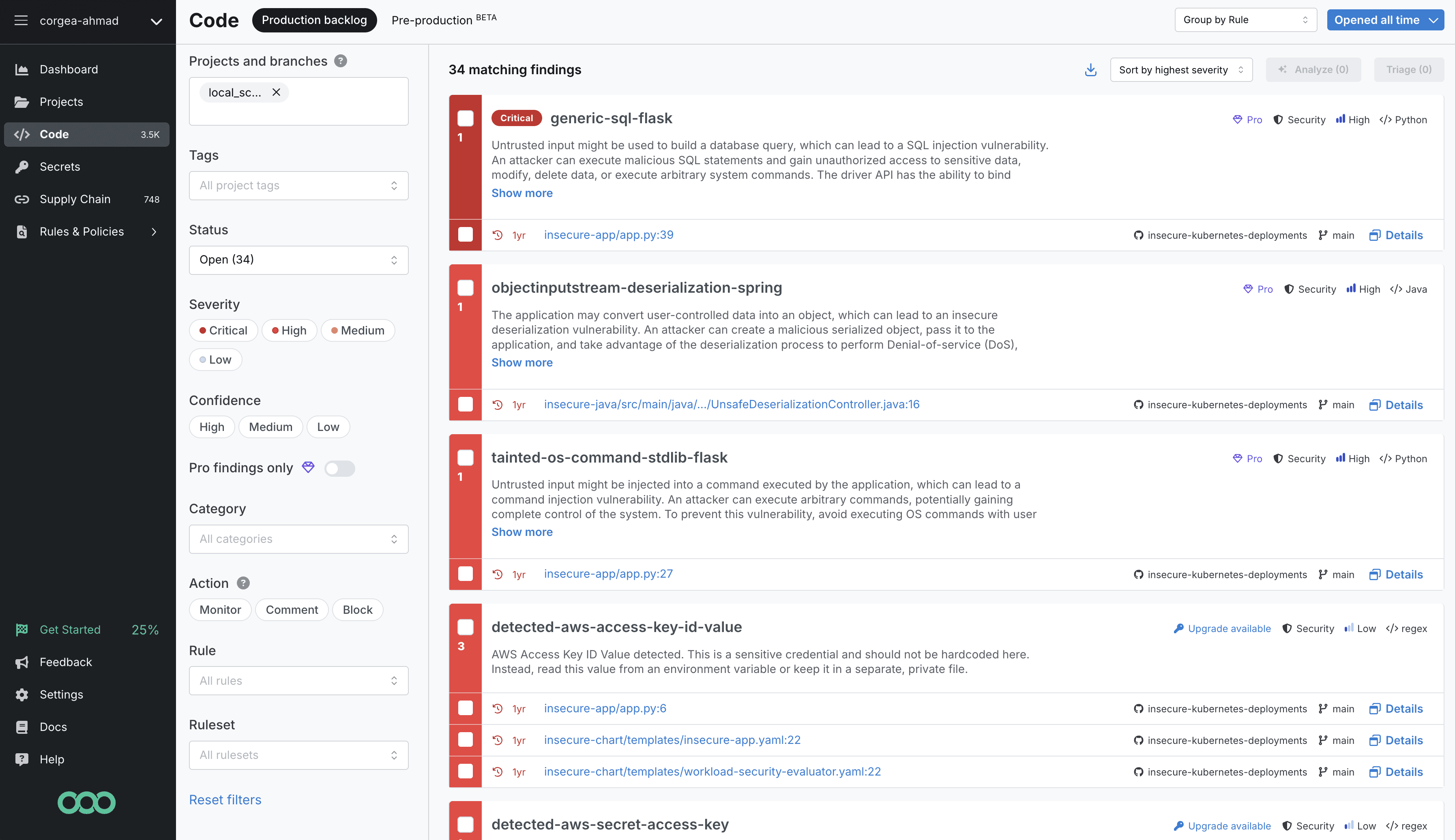

Snyk

Snyk is widely adopted as a developer-first security platform, and its AI SAST approach is grounded in DeepCode AI for detection and Snyk Agent Fix for auto-fix workflows. In 2026, Snyk’s positioning leans into “fast, IDE-native scanning with fewer false positives and validated fix suggestions.”

What it is

Snyk Code is Snyk’s SAST tool for first-party code, designed to run in developer workflows (IDE, repos, CI/CD) and surface actionable findings early.

How it uses AI

DeepCode AI: described as a code analyzer built on 25M+ data-flow cases, supporting 19+ languages, and using multiple AI models, designed to find, prioritize, and autofix vulnerabilities.

Agent Fix workflow: in Snyk’s docs, “Generate Fix” produces up to five potential fixes and then automatically retests the fix for quality using Snyk Code’s engine.

Snyk also positions its auto-fix approach as not “LLM-only” by emphasizing pre-screening/validation to reduce risky hallucinations (described in Snyk’s own AI trust messaging).
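Snyk’s docs describe generating up to five candidate fixes and then retesting them with Snyk Code’s engine. The underlying pattern (generate several patches, keep only those that pass validation) is worth understanding when evaluating any vendor. Below is a generic, hypothetical Python sketch of that guardrail loop. It is not Snyk’s implementation or API; the `Candidate` type, the rescan and test callbacks, and the confidence threshold are all assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    patch: str          # proposed change (hypothetical representation)
    confidence: float   # model-reported confidence in [0.0, 1.0]


def validate_fixes(
    candidates: List[Candidate],
    rescan_is_clean: Callable[[str], bool],
    tests_pass: Callable[[str], bool],
    max_candidates: int = 5,
    min_confidence: float = 0.5,
) -> List[Candidate]:
    """Return only the AI-proposed patches that survive every guardrail, best first."""
    # Look at no more than `max_candidates` proposals, highest confidence first.
    ranked = sorted(candidates, key=lambda c: c.confidence, reverse=True)[:max_candidates]
    accepted = []
    for cand in ranked:
        if cand.confidence < min_confidence:
            continue  # guardrail 1: confidence threshold
        if not rescan_is_clean(cand.patch):
            continue  # guardrail 2: the static engine still reports the finding
        if not tests_pass(cand.patch):
            continue  # guardrail 3: the patch breaks existing behavior
        accepted.append(cand)
    return accepted


if __name__ == "__main__":
    proposals = [
        Candidate("use parameterized query", 0.9),
        Candidate("escape quotes by hand", 0.8),
        Candidate("delete the query", 0.7),
        Candidate("use parameterized query (variant)", 0.3),
    ]

    # Toy stand-ins: a real pipeline would re-run the SAST engine and the test suite.
    def rescan_is_clean(patch: str) -> bool:
        return "parameterized" in patch or "delete" in patch

    def tests_pass(patch: str) -> bool:
        return "delete" not in patch

    for cand in validate_fixes(proposals, rescan_is_clean, tests_pass):
        print(f"{cand.confidence:.1f} {cand.patch}")
```

Ordering the checks from cheapest (a threshold) to most expensive (running tests) keeps validation fast when most proposals fail early.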

Best use cases

Teams that want a “developer-first” SAST tool that works where developers already live, especially in IDEs and pull/merge workflows.

Orgs where speed matters and SAST must not slow delivery (Snyk heavily emphasizes real-time/IDE workflows).

Programs that want AI-assisted fixes but also want automated screening so developers see higher-quality recommendations.

Who it’s best for

Engineering managers, founders, and AppSec teams looking for SAST tools for developers, especially where the success metric is adoption and fast remediation, not just scanning coverage.

Key strengths

An explicit claim that its AI-based engine produces fewer false positives, framed as a better day-to-day developer experience.

DeepCode AI scale claims (data-flow cases, multi-model approach, language coverage) map well to modern polyglot environments.

Fix workflow includes auto-retesting/quality checks after generation, which is the kind of guardrail buyers should demand in 2026.

Potential limitations

AI-generated fixes are not universal; in practice, coverage varies by language, flaw type, and configuration, so you’ll want a proof-of-value run on your own repos and top vulnerability classes.

Like other SAST engines rooted in data-flow analysis, it can struggle to detect more complex vulnerabilities such as business logic flaws and missing authentication.

As with any AI-assisted remediation, you still need good tests and code review discipline to ensure fixes don’t change semantics or introduce regressions.

Semgrep

Semgrep is known for developer-friendly static analysis, and its 2026 AI angle is Semgrep Assistant: a layer that combines static analysis with LLMs to reduce noise, explain findings, and provide remediation guidance (including autofix suggestions when appropriate).

What it is

A SAST-first platform with strong rule-based scanning roots, plus an AI assistant that focuses on triage, noise filtering, and fix guidance in PR workflows.

How it uses AI

Semgrep Assistant is described as combining static analysis and LLMs “so teams only deal with real security issues.”

Noise Filtering: claims to filter likely false positives by understanding mitigating context, reducing the number of findings to triage (Semgrep cites a “20% the day you turn it on” reduction).

Remediation: provides step-by-step guidance and can suggest autofix snippets when it identifies a true positive (with confidence thresholds and workflow controls in the docs).

“Memories” and auto-triage: uses your triage history to avoid repeating the same triage decisions, and can suggest findings to ignore with explanations.
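Reusing triage decisions can be illustrated with a deliberately simple, exact-match sketch in Python. This is not how Semgrep implements Memories or auto-triage; the `Finding` fields, the fingerprint scheme (rule + file + whitespace-normalized snippet), and the verdict labels are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Finding:
    rule_id: str
    path: str
    snippet: str  # source code at the finding location


@dataclass(frozen=True)
class Decision:
    verdict: str  # e.g. "false_positive" or "true_positive" (hypothetical labels)
    reason: str   # the explanation a reviewer gave


Key = Tuple[str, str, str]


def fingerprint(f: Finding) -> Key:
    # Key on rule, file, and whitespace-normalized code rather than line number,
    # so a decision survives edits that only shift or re-indent the code.
    return (f.rule_id, f.path, " ".join(f.snippet.split()))


class TriageMemory:
    """Remembers past triage verdicts and suggests them for matching findings."""

    def __init__(self) -> None:
        self._decisions: Dict[Key, Decision] = {}

    def record(self, finding: Finding, decision: Decision) -> None:
        self._decisions[fingerprint(finding)] = decision

    def suggest(self, finding: Finding) -> Optional[Decision]:
        return self._decisions.get(fingerprint(finding))

    def split(self, findings: List[Finding]) -> Tuple[List[Finding], List[Tuple[Finding, Decision]]]:
        """Separate findings that need a human from those with a remembered verdict."""
        needs_review: List[Finding] = []
        suggested: List[Tuple[Finding, Decision]] = []
        for finding in findings:
            decision = self.suggest(finding)
            if decision is None:
                needs_review.append(finding)
            else:
                suggested.append((finding, decision))
        return needs_review, suggested
```

Remembered verdicts come back paired with the original reason instead of being silently suppressed, so a reviewer can still see why a finding is being proposed for dismissal.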

Best use cases

AppSec teams that want strong control over static analysis, plus AI help where teams actually struggle: triage and developer guidance.

Orgs that want fewer interruptions for developers (noise filtering can suppress PR comments for likely false positives while keeping findings available for security review).

Teams that need a “learning system” where triage decisions become reusable context over time.

Who it’s best for

AppSec engineers and developer-platform teams that care about developer experience but still want a strong static analysis foundation and customization model, augmented by AI for the human bottlenecks.

Key strengths

Very explicit AI value: noise filtering, remediation guidance, and org-specific learning (“memories”).

Strong PR-centric workflow: explanations and suggested fixes appear where developers work.

Transparency in docs about how and where features apply (e.g., remediation, auto-triage, noise filtering behavior).

Potential limitations

Some assistant features are not available for custom/community rules (Semgrep calls this out directly for explanations).

Noise filtering is explicitly labeled beta in the docs, which matters if you’re planning to operationalize it at scale.

Qwiet AI

Qwiet AI (acquired by Harness) positions itself around speed, reachability, and automation: scan fast, reduce false positives, and provide AI-generated fixes via AutoFix. Its technical framing leans heavily on graph-based analysis (Code Property Graph) plus ML and LLM-driven remediation suggestions.

What it is

A SAST platform focused on developer productivity: quick scans, fewer findings, and faster remediation cycles, marketed as “SAST that won’t slow you down.”

How it uses AI

Uses a Code Property Graph (CPG) approach that integrates data flow, control flow, and syntax tree analysis for better context and prioritization.

AutoFix: Qwiet’s docs state AutoFix uses large language models to generate code fix suggestions (and steps), using CPG-derived context; the docs also state the LLM runs in Qwiet’s virtual private cloud and that customer data is not used for model training.

Qwiet reports (in its own materials) large reductions in false positives and faster remediation, and provides a customer bakeoff-style report as supporting evidence.
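At its core, Code Property Graph analysis is graph traversal. The Python sketch below is a toy, not Qwiet’s engine: it hand-builds a few labeled edges (the node names, edge kinds, and graph are hypothetical) and uses breadth-first search over data-flow edges to decide whether untrusted input can reach a SQL sink. That reachability answer is what lets a tool rank a reachable finding above an unreachable one.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

# A toy property graph: each node maps to outgoing (target, edge_kind) pairs.
# A real CPG merges AST, control-flow, and data-flow edges for a whole program.
Graph = Dict[str, List[Tuple[str, str]]]

SAMPLE_GRAPH: Graph = {
    "request.args['q']": [("q", "DATA_FLOW")],
    "q": [("query = 'SELECT ...' + q", "DATA_FLOW"), ("log(q)", "DATA_FLOW")],
    "query = 'SELECT ...' + q": [
        ("cursor.execute(query)", "DATA_FLOW"),
        ("'SELECT ...'", "AST"),  # syntax edge, ignored by the data-flow query
    ],
    "config['report_q']": [("report_q", "DATA_FLOW")],
    "report_q": [],
}


def find_path(graph: Graph, source: str, sink: str, kinds: Set[str]) -> List[str]:
    """Breadth-first search from source to sink along edges whose kind is in `kinds`.

    Returns the node path, or an empty list when the sink is unreachable.
    """
    parents: Dict[str, Optional[str]] = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == sink:
            path: List[str] = []
            current: Optional[str] = node
            while current is not None:
                path.append(current)
                current = parents[current]
            return path[::-1]
        for target, kind in graph.get(node, []):
            if kind in kinds and target not in parents:
                parents[target] = node
                queue.append(target)
    return []


if __name__ == "__main__":
    sink = "cursor.execute(query)"
    for source in ("request.args['q']", "config['report_q']"):
        path = find_path(SAMPLE_GRAPH, source, sink, {"DATA_FLOW"})
        print(" -> ".join(path) if path else f"{source}: sink unreachable (lower priority)")
```

Filtering by edge kind is the point of keeping all three views in one graph: the same structure answers syntax, control-flow, and data-flow questions depending on which edges a query follows.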

Best use cases

Teams where CI time is precious and “SAST that takes forever” is a non-starter.

Orgs prioritizing reachability and reduction of low-confidence findings, especially when developer time spent triaging is the bottleneck.

Programs that want AI fix suggestions directly tied to flow/trace context rather than generic snippets.

Who it’s best for

Engineering orgs and DevSecOps teams that measure success in time saved and triage reduction, and want a SAST tool with an explicit “fast + reachable + fixable” focus.

Key strengths

Speed and productivity claims are unusually specific (including a vendor-published bakeoff report showing scan time and false positive deltas across real apps).

AutoFix implementation details are clearly documented, including deployment model claims (VPC) and a statement that customer data is not used to train models.

The docs are candid about AI limitations and the need for review and tests when applying suggestions. That is the right posture for 2026 AI remediation.

Potential limitations

AutoFix is not enabled by default per the docs, and it only generates suggestions for a limited subset of top findings (helpful for focus, but important to understand for coverage expectations).

The strong quantitative claims (scan time multipliers, false positive deltas, developer hours saved) are often based on vendor materials; treat them as directional and validate with your own repos, tuning, and workflows.

Graph-based analysis adds context, but like other traditional SAST tooling it typically struggles to detect more complex vulnerabilities such as business logic flaws and missing authentication.

How to choose the right AI SAST tool in 2026

In 2026, the “right” AI SAST tool is the one that produces trusted signal and fits your engineers’ flow. That usually matters more than the longest feature list.

A practical selection approach that works for most teams:

Decide what you’re buying AI for (detection, triage, remediation). Many platforms do one exceptionally well and the others “well enough.” Start by naming your bottleneck: noisy findings, slow prioritization, or slow fixes.

Run a realistic pilot that measures workflow outcomes, not just vulnerability counts. In 2026 terms, the metrics that matter tend to look like:

Mean time to a clean triaged list (not just scan time).

Reduction in duplicate/low-confidence findings (noise).

Fix acceptance rate and regression rate (do AI fixes help, and do they break things?).

Demand safe remediation ergonomics. In 2026, AI-generated fixes should come with guardrails (quality gates, retesting, confidence thresholds, or review workflows) because “fast wrong fixes” are worse than slow correct ones.

Finally, evaluate how future-ready the platform is for AI-driven development. Analyst guidance notes that SAST vendors are moving toward deeper integration with AI development environments and more AI-driven remediation experiences, so it’s worth checking how each vendor is implementing that in practice today.
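The pilot metrics described above reduce to simple ratios. Here is a minimal Python sketch, assuming you can count findings, suggested fixes, accepted fixes, and regressions during the pilot. The field names and the example numbers are illustrative, not measurements from any tool.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class PilotCounts:
    # Raw counts collected during a pilot (hypothetical field names).
    baseline_findings: int   # findings from your current scanner on the same repos
    tool_findings: int       # findings the candidate tool reports after its own filtering
    fixes_suggested: int     # AI fix suggestions shown to developers
    fixes_accepted: int      # suggestions merged as-is or lightly edited
    fixes_regressed: int     # accepted fixes later reverted or that broke tests
    triage_hours: float      # wall-clock time to reach a clean, triaged list


def pilot_metrics(c: PilotCounts) -> Dict[str, float]:
    def ratio(num: float, den: float) -> float:
        # Guard against empty pilots instead of raising ZeroDivisionError.
        return num / den if den else 0.0

    return {
        "noise_reduction": ratio(c.baseline_findings - c.tool_findings, c.baseline_findings),
        "fix_acceptance_rate": ratio(c.fixes_accepted, c.fixes_suggested),
        "regression_rate": ratio(c.fixes_regressed, c.fixes_accepted),
        "hours_to_clean_list": c.triage_hours,
    }


if __name__ == "__main__":
    # Made-up numbers, purely to show the arithmetic.
    counts = PilotCounts(
        baseline_findings=400, tool_findings=320,
        fixes_suggested=50, fixes_accepted=30, fixes_regressed=3,
        triage_hours=12.5,
    )
    for name, value in pilot_metrics(counts).items():
        print(f"{name}: {value:.2f}")
```

Regression rate is measured against accepted fixes, since a rejected suggestion cannot regress anything. A lower finding count only counts as noise reduction if the tool still reports the true positives you already know about, so seed the pilot repos with a few known issues.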
